The Science DMZ is a network, or a portion of a network, designed to facilitate the transfer of big science data.

Today’s general-purpose networks, also referred to as enterprise networks, are capable of efficiently transporting basic data. These networks support multiple missions, including organizations’ operational services such as email, procurement systems, and web browsing. However, they face difficulties when transferring terabyte- and petabyte-scale science data.

Common Design Goals of Campus Enterprise Networks

1) Serve a large number of users and platforms: desktops, laptops, mobile devices, supercomputers, tablets, etc.

2) Support a variety of applications: email, browsing, voice, video, procurement systems, and others.

3) Provide security against the multiple threats that result from the large number of applications and platforms.

4) Provide a level of Quality of Service (QoS) that satisfies user expectations.

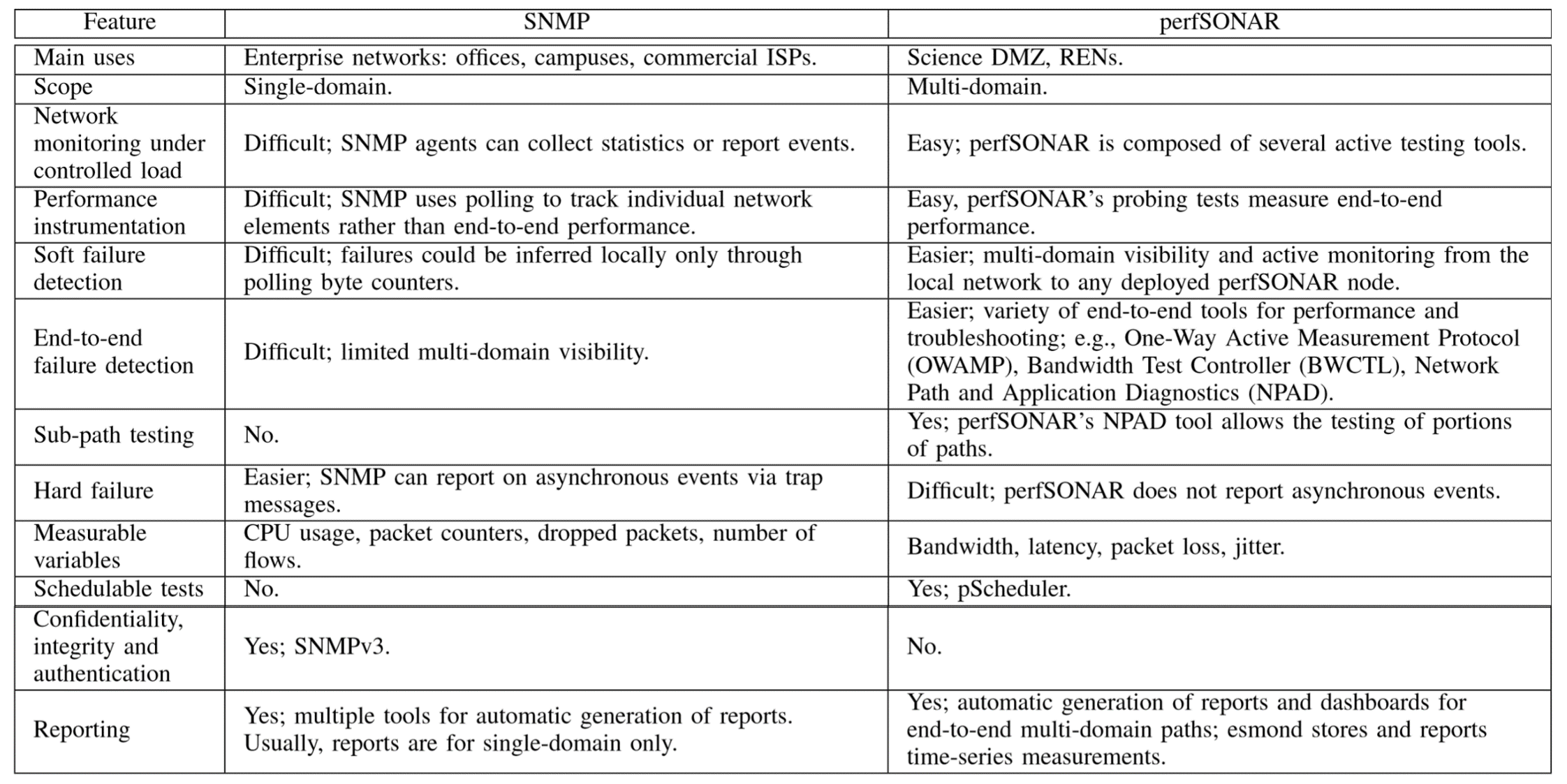

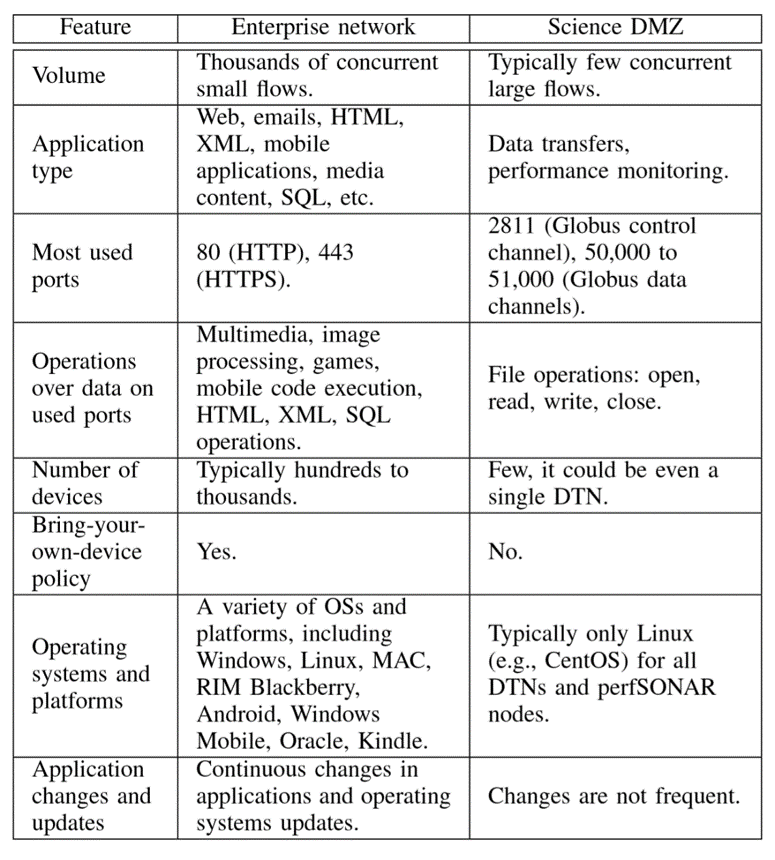

Science DMZ compared to a Campus Enterprise Network

The Science DMZ's connection to the WAN involves as few network devices as possible, to minimize the possibility of packet loss at intermediate devices. The Science DMZ can also be considered a security architecture, because it limits the application types and corresponding flows supported by end devices. While flows in enterprise networks are numerous and diverse, those in Science DMZs are usually well identified, enabling security policies to be tied to those flows.

Definition: A flow is defined as a set of IP packets passing an observation point in the network during a certain time interval.
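The definition does not fix which packets belong together. A common convention, assumed here rather than taken from the text, is to key a flow on the 5-tuple (source and destination IP address, protocol, source and destination port). The minimal sketch below groups hypothetical packet records seen at one observation point into flows within a fixed time interval; the `Packet` record, the `group_flows` function, and the 60-second interval are illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Packet:
    """Hypothetical packet record captured at one observation point."""
    timestamp: float  # seconds
    src_ip: str
    dst_ip: str
    protocol: int     # e.g., 6 = TCP, 17 = UDP
    src_port: int
    dst_port: int
    length: int       # bytes

def group_flows(packets, window_start, window_len=60.0):
    """Group packets seen in [window_start, window_start + window_len) into flows
    keyed by the 5-tuple; returns {key: (packet_count, byte_count)}."""
    flows = defaultdict(lambda: [0, 0])
    for p in packets:
        if window_start <= p.timestamp < window_start + window_len:
            key = (p.src_ip, p.dst_ip, p.protocol, p.src_port, p.dst_port)
            flows[key][0] += 1
            flows[key][1] += p.length
    return {k: tuple(v) for k, v in flows.items()}
```

For example, two 9000-byte packets with the same 5-tuple inside the interval collapse into one entry, `(2, 18000)`, while a packet with a different source port forms a separate flow and a packet outside the interval is ignored.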

Security-Related Differences Between Enterprise Networks and Science DMZs

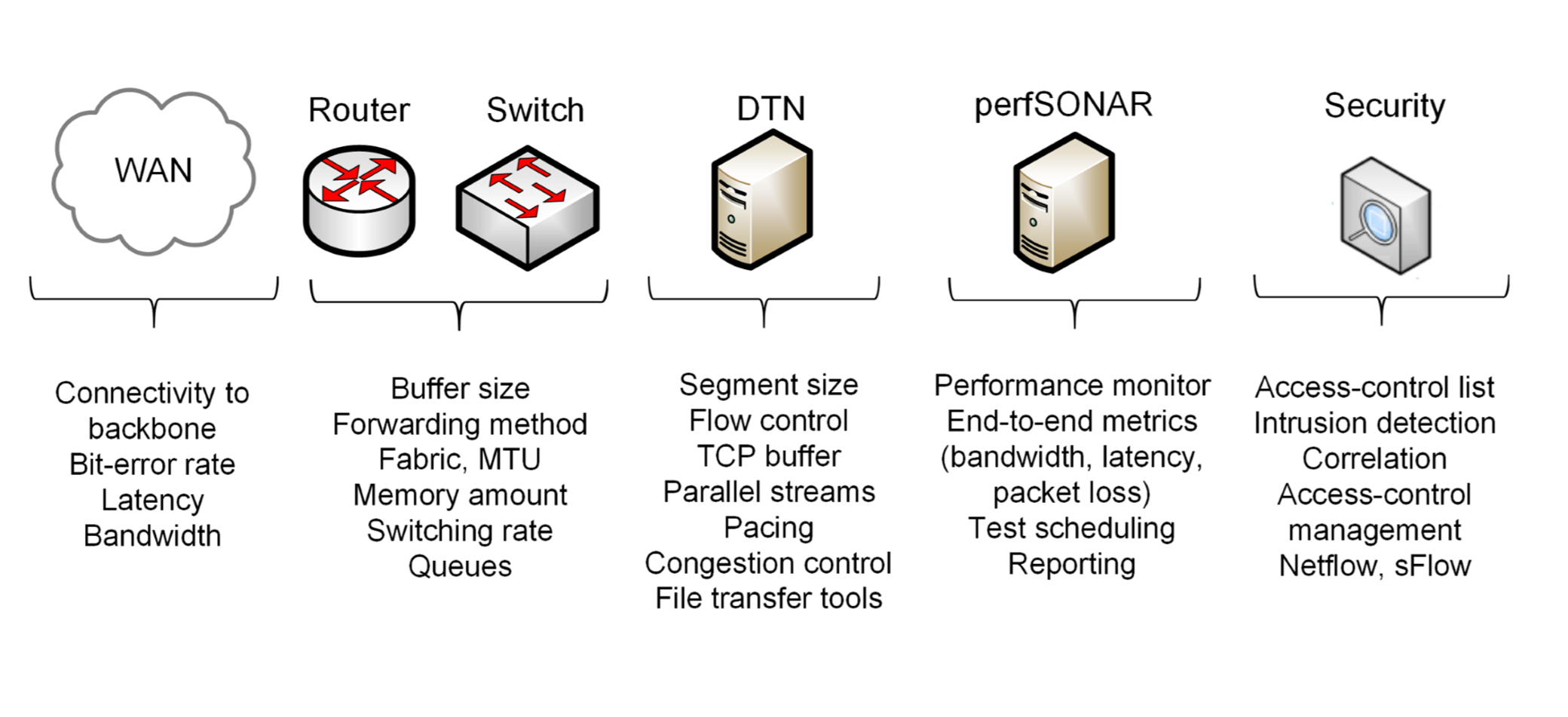

Inventory of the Science DMZ features

The main characteristics of a Science DMZ are the deployment of a friction-free path between end devices across the WAN, the use of DTNs, the active performance measurement and monitoring of the paths between the Science DMZ and the collaborator networks, and the use of access-control lists (ACLs) and offline security appliances.

1. Friction-free network path

- The path has no devices that may add excessive delay or cause packets to be delivered out of order, such as firewalls, intrusion prevention systems (IPSs), and network address translation (NAT) devices.

- The rationale for this Science DMZ design choice is to prevent any packet loss or retransmission, which can trigger a decrease in TCP throughput.

- Data Transfer Nodes (DTNs) are connected to remote systems, such as collaborators’ networks, via the WAN.

- The high-latency path is composed of routers and switches which have large buffer sizes to absorb transitory packet bursts and prevent losses.

2. Dedicated high-performance DTNs

- They are typically Linux devices built and configured to receive WAN transfers at high speed.

- They use optimized data transfer tools such as Globus.

- General-purpose applications (e.g. email clients, document editors, media players) are not installed.

- Having a narrow and specific set of applications simplifies the design and enforcement of security policies.

3. Performance measurement and monitoring point

The most widely used tool for this purpose is the perfSONAR toolkit. It provides an automated mechanism to actively measure end-to-end metrics such as throughput, latency, and packet loss. Key aspects include:

- A primary high-capacity path that connects the Science DMZ with the WAN.

- Maintain a healthy path by identifying and eliminating soft failures in the network.

- Soft failures have minimal impact on basic connectivity, but high throughput can no longer be achieved.

- Examples of soft failures include failing components and routers forwarding packets using the main CPU rather than the forwarding plane.

4. ACLs and offline security appliances

The primary method of protecting a Science DMZ is through a router's access control lists (ACLs). ACLs are implemented in the forwarding plane of a router and therefore do not compromise end-to-end throughput. Additional offline appliances include payload-based and flow-based intrusion detection systems (IDSs).
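Router ACLs are commonly evaluated as an ordered list of rules in which the first matching rule decides the action and unmatched traffic falls through to an implicit deny. The sketch below models that first-match logic in software for illustration only; real ACLs are written in vendor-specific syntax and evaluated in hardware. The `Rule` fields, addresses, and port numbers are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rule:
    action: str               # "permit" or "deny"
    src: str                  # source prefix, e.g. "198.51.100.0/24"
    dst: str                  # destination prefix
    dst_port: Optional[int]   # None matches any destination port

def evaluate(acl, src_ip, dst_ip, dst_port):
    """Return the action of the first matching rule; deny if none match."""
    s = ipaddress.ip_address(src_ip)
    d = ipaddress.ip_address(dst_ip)
    for rule in acl:
        if (s in ipaddress.ip_network(rule.src)
                and d in ipaddress.ip_network(rule.dst)
                and rule.dst_port in (None, dst_port)):
            return rule.action
    return "deny"  # implicit deny at the end of the list

# Hypothetical policy: only a collaborator's prefix may reach two ports on a DTN.
acl = [
    Rule("permit", "198.51.100.0/24", "203.0.113.10/32", 50000),
    Rule("permit", "198.51.100.0/24", "203.0.113.10/32", 50001),
]
```

Because Science DMZ flows are well identified, a short list like this can describe the permitted traffic precisely.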

Characteristics of the Data Link Layer

- Enables data movement over a link from one device to another.

- Media access control.

- Packet addressing.

- Formatting the frame that is used to encapsulate data.

- Notification of errors that occur at the physical layer.

- It organizes bits into structured units called frames, which carry data in an orderly manner.

- It ensures error-free communication between two devices through the correct transmission of frames.
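Framing and error notification can be illustrated with an Ethernet-style frame: a header with destination address, source address, and type field, then the payload, then a trailing frame check sequence (FCS) computed with CRC-32. The sketch below uses Python's `zlib.crc32`, which implements the same CRC-32 polynomial used by Ethernet; it is simplified (no preamble, padding, or VLAN tags), the FCS byte order is an assumption of this sketch, and the MAC addresses are made up.

```python
import struct
import zlib

def build_frame(dst_mac: bytes, src_mac: bytes, ethertype: int, payload: bytes) -> bytes:
    """Header (6 + 6 + 2 bytes) + payload + 4-byte CRC-32 frame check sequence."""
    header = dst_mac + src_mac + struct.pack("!H", ethertype)
    body = header + payload
    return body + zlib.crc32(body).to_bytes(4, "little")

def frame_ok(frame: bytes) -> bool:
    """Receiver side: recompute the CRC over header + payload and compare."""
    body, fcs = frame[:-4], frame[-4:]
    return zlib.crc32(body).to_bytes(4, "little") == fcs

frame = build_frame(bytes.fromhex("020000000002"), bytes.fromhex("020000000001"),
                    0x0800, b"science data")
damaged = bytearray(frame)
damaged[20] ^= 0x01                              # flip one bit inside the payload
print(frame_ok(frame), frame_ok(bytes(damaged)))  # True False
```

The receiver cannot repair the damaged frame from the CRC alone; it can only detect the error and discard the frame.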

Characteristics of the Network Layer

- Establishes paths that are used for the transfer of data packets between network devices.

- Traffic direction.

- Addressing: service and logical network addresses.

- Routing.

- Packet switching.

- Controlling packet sequence.

- Complete error detection, from sender to receiver.

- Congestion control.

- Gateway services.
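As one concrete example of error detection at the network layer, IPv4 protects its header with the 16-bit one's-complement Internet checksum described in RFC 1071. The sketch below computes that checksum; note that the IPv4 checksum covers only the header, so errors in the payload must be caught by other layers.

```python
def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement sum of 16-bit words, then complemented (RFC 1071)."""
    if len(data) % 2:
        data += b"\x00"                 # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:                  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

data = bytes([0x00, 0x01, 0xF2, 0x03, 0xF4, 0xF5, 0xF6, 0xF7])
print(hex(internet_checksum(data)))     # 0x220d
```

A receiver that runs the same computation over the data plus the transmitted checksum obtains 0 when no error is detected.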

Characteristics of the Transport Layer

- Responsible for end-to-end message delivery between network hosts. This layer splits messages into segments and reassembles them, and it controls the reliability of a given connection.

- Guaranteed delivery of data.

- Name resolution.

- Flow control.

- Error detection and recovery.
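The segmentation and reassembly function can be sketched as follows: the sender splits a message into segments of at most one maximum segment size (MSS), each tagged with the byte offset where it starts, and the receiver uses those offsets to rebuild the message even if segments arrive out of order. This is similar in spirit to TCP's byte-based sequence numbers but heavily simplified (no initial sequence number, acknowledgements, or retransmission); the 1460-byte MSS is an assumed value.

```python
import random

def segment(message: bytes, mss: int = 1460):
    """Split a message into (sequence_number, payload) pairs of at most mss bytes;
    the sequence number here is simply the byte offset of the payload."""
    return [(seq, message[seq:seq + mss]) for seq in range(0, len(message), mss)]

def reassemble(segments):
    """Rebuild the message by ordering segments on their sequence numbers."""
    ordered = sorted(segments, key=lambda s: s[0])
    return b"".join(payload for _, payload in ordered)

msg = bytes(range(256)) * 20        # 5120-byte example message
segs = segment(msg)                 # 4 segments: 3 x 1460 bytes + 740 bytes
random.Random(1).shuffle(segs)      # simulate out-of-order arrival
assert reassemble(segs) == msg
```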

Characteristics of the Application Layer

- It serves as the interface between application software and the network protocols on the computer. It provides the services necessary to support applications.

- This layer provides the interface used by applications such as FTP.

Data-Link and Network-Layer Devices

The essential functions performed by a router are:

- Routing: determination of the route taken by packets.

- Forwarding: switching of a packet from the input port to the appropriate output port.

Traditional routing approaches, such as static routing and dynamic routing protocols (Open Shortest Path First (OSPF) and the Border Gateway Protocol (BGP)), are used in the implementation of Science DMZs.

Routing events, such as routing table updates, occur at the millisecond, second, or minute timescale. Forwarding, on the other hand, occurs at the nanosecond timescale at transmission rates of 10 Gbps and above.
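A quick calculation shows why forwarding must happen at the nanosecond timescale. The time to serialize one packet is its size in bits divided by the link rate. The sketch below evaluates this for a 1500-byte packet and for a minimum-size Ethernet frame, assuming the 64-byte frame plus 20 bytes of preamble and inter-frame gap is what occupies the wire.

```python
def packet_time_ns(size_bytes: int, rate_bps: float) -> float:
    """Serialization time of one packet, in nanoseconds."""
    return size_bytes * 8 / rate_bps * 1e9

for rate_gbps in (10, 100):
    rate = rate_gbps * 1e9
    print(f"{rate_gbps} Gbps: 1500 B -> {packet_time_ns(1500, rate):.1f} ns, "
          f"64 B + 20 B overhead -> {packet_time_ns(84, rate):.2f} ns")
```

At 10 Gbps a back-to-back stream of minimum-size frames leaves about 67 ns per packet, and at 100 Gbps about 6.7 ns, so each forwarding decision must complete in that time.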

Best practices used in regular enterprise networks are applicable to Science DMZs.

Switching

The figure above shows a generic router architecture. Modern routers may have, at each port, a network processor (NP) and a table derived from the routing table, referred to as the forwarding table (FT) or forwarding information base (FIB).
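A FIB lookup selects the entry with the longest prefix that matches the packet's destination address. The sketch below performs longest-prefix matching over a small, hypothetical FIB with a linear scan; hardware forwarding planes use specialized structures such as tries or TCAMs to do this at line rate. The prefixes and port names are made up.

```python
import ipaddress

# Hypothetical FIB: prefix -> output port
FIB = {
    "0.0.0.0/0": "wan0",          # default route toward the WAN
    "10.0.0.0/8": "dist1",
    "10.20.0.0/16": "dist2",
    "10.20.30.0/24": "dtn-port",
}

def lookup(dst: str, fib=FIB) -> str:
    """Return the output port of the longest matching prefix."""
    addr = ipaddress.ip_address(dst)
    best_len, best_port = -1, None
    for prefix, port in fib.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and net.prefixlen > best_len:
            best_len, best_port = net.prefixlen, port
    return best_port
```

For instance, `lookup("10.20.30.40")` matches all four entries but returns `"dtn-port"` because the /24 is the most specific.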

Router queues/buffers absorb traffic fluctuations. Even in the absence of congestion, fluctuations are present, resulting mostly from coincident traffic bursts.

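To avoid head-of-line (HOL) blocking, in which a packet at the head of an input queue that is waiting for a busy output port blocks the packets behind it, many switches use output buffering, a mixture of internal and output buffering, or techniques emulating output buffering such as Virtual Output Queueing (VOQ).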
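The effect of head-of-line blocking shows up in a toy model of an input-queued switch. Each input holds packets labeled with their output port; in every time slot each output accepts at most one packet and each input sends at most one. With a single FIFO per input, only the head packet is eligible; with VOQ, an input may send any queued packet whose output is free, which is equivalent to keeping one queue per output. The fixed-priority, greedy arbitration is a simplifying assumption; real VOQ switches use matching schedulers.

```python
from collections import deque

def drain_fifo(inputs):
    """One FIFO per input: only the head packet may be sent in a slot."""
    queues = [deque(q) for q in inputs]
    slots = 0
    while any(queues):
        slots += 1
        busy = set()                      # outputs already used in this slot
        for q in queues:                  # fixed priority: lower input index first
            if q and q[0] not in busy:
                busy.add(q.popleft())
    return slots

def drain_voq(inputs):
    """VOQ-like: an input may send any queued packet whose output is free."""
    queues = [deque(q) for q in inputs]
    slots = 0
    while any(queues):
        slots += 1
        busy = set()
        for q in queues:
            for i, out in enumerate(q):   # look past the head of the queue
                if out not in busy:
                    busy.add(out)
                    del q[i]              # at most one packet per input per slot
                    break
    return slots

# Each list holds the output ports ("A", "B", "C") of the packets queued at one input.
inputs = [["A", "B"], ["A", "C"]]
print(drain_fifo(inputs), drain_voq(inputs))   # 3 2
```

In the FIFO case, the second input's packet for output C waits behind its packet for the busy output A; VOQ sends it in the first slot.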

There are critical switching attributes that must be considered for a well-designed Science DMZ. These attributes are related to the characteristics of Science DMZ traffic and the role of switches in mitigating packet loss.

Traffic Profile:

At a switch, buffer size, forwarding or switching rate, and queues should be selected based on the traffic profile to be supported by the network. Enterprise networks and Science DMZs are subject to different traffic profiles.

Enterprise flows are less sensitive than Science DMZ flows to packet loss and have lower throughput requirements. Typically, files in enterprise applications are small. Even though packet loss reduces TCP throughput, from a user's perspective this reduction results in only a modest increase in data transfer time. Science DMZ applications, on the other hand, typically transfer terabyte-scale files. Hence, even a very small packet loss rate can cause TCP throughput to collapse below 1 Gbps, and a terabyte-scale data transfer then requires many additional hours or days to complete.
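The sensitivity to loss can be quantified with the well-known approximation of Mathis et al. for steady-state TCP Reno throughput: throughput ≈ (MSS / RTT) · k / √p, where k = √(3/2) ≈ 1.22 and p is the packet loss rate. It is a model, not an exact law (it ignores timeouts, and newer congestion control algorithms behave differently), but it shows how quickly loss erodes throughput on a long path. The 1460-byte MSS and 50 ms RTT are illustrative assumptions.

```python
import math

def mathis_throughput_bps(mss_bytes: float, rtt_s: float, loss_rate: float) -> float:
    """Approximate steady-state TCP Reno throughput: (MSS / RTT) * k / sqrt(p)."""
    k = math.sqrt(3 / 2)
    return (mss_bytes * 8 / rtt_s) * k / math.sqrt(loss_rate)

def transfer_hours(size_bytes: float, rate_bps: float) -> float:
    """Time to move size_bytes at a constant rate, in hours."""
    return size_bytes * 8 / rate_bps / 3600

for p in (1e-6, 1e-5, 1e-4):
    rate = mathis_throughput_bps(1460, 0.050, p)
    print(f"loss {p:.0e}: {rate / 1e6:7.1f} Mbps, 1 TB in {transfer_hours(1e12, rate):5.1f} h")
```

Under these assumptions, a loss rate of 1e-5 limits a single flow to roughly 90 Mbps, so moving 1 TB takes about a day regardless of whether the underlying link runs at 10 Gbps.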

A well-designed Science DMZ is minimally sensitive to latency. One of the goals of the Science DMZ is to prevent packet loss and thus to sustain high throughput over high latency WANs. Hence, the Science DMZ uses dedicated DTNs and switches capable of absorbing transient bursts. It also avoids inline security appliances that may cause packets to be dropped or delivered out of order. By fulfilling these requirements, the achievable throughput can approach the full network capacity.

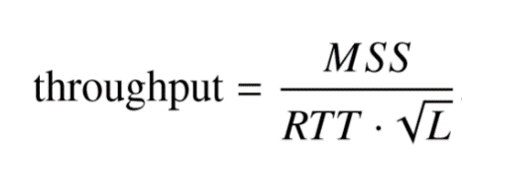

Maximum Transmission Unit (MTU): The MTU has a prominent impact on TCP throughput.

TCP throughput is directly proportional to the maximum segment size (MSS), which is derived from the MTU. Congestion control algorithms perform multiple probes to determine how much traffic the network can handle. In high-speed networks, using half a dozen or so small probes to see how the network responds wastes a large amount of bandwidth. Similarly, when a packet loss is detected, the sending rate is decreased by a factor of two, and TCP recovers only slowly from this reduction. The speed of the recovery is proportional to the MTU. Thus, large (jumbo) frames are recommended for Science DMZs.
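The effect of frame size on recovery can be estimated with a simple model of classic Reno-style congestion avoidance, assumed here: after a loss the congestion window is halved, then it grows by one MSS per RTT. Refilling a path whose bandwidth-delay product is W segments therefore takes about W/2 round trips, and W shrinks as the MSS grows. The 10 Gbps, 50 ms path is illustrative, and the MSS values assume 40 bytes of IPv4 and TCP headers without options.

```python
def recovery_time_s(link_bps: float, rtt_s: float, mss_bytes: float) -> float:
    """Time to grow the window from BDP/2 back to BDP at +1 MSS per RTT."""
    bdp_segments = link_bps * rtt_s / 8 / mss_bytes
    return (bdp_segments / 2) * rtt_s

for mtu in (1500, 9000):
    mss = mtu - 40  # IPv4 + TCP headers without options
    t = recovery_time_s(10e9, 0.050, mss)
    print(f"MTU {mtu}: MSS {mss} B, recovery ~ {t:.0f} s ({t / 60:.1f} min)")
```

In this model, a single loss costs about 18 minutes of reduced throughput with 1500-byte frames versus about 3 minutes with 9000-byte jumbo frames, a ratio equal to the ratio of the two MSS values.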

Buffer size of output or transmission ports:

The buffer size of a router’s output port must be large enough, since packets arriving simultaneously at different input ports may be forwarded to the same output port. Additionally, buffers prevent packet loss when traffic bursts occur. A common sizing rule is:

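$$\text{buffer size} = C \cdot \mathrm{RTT}$$

where C is the capacity of the output link and RTT is the round-trip time. This product is also known as the bandwidth-delay product (BDP).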
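As a worked example of the buffer-sizing rule, the sketch below computes C · RTT for a few link rates and round-trip times, converting bits to bytes. The rates and RTTs are illustrative; in practice the RTT should reflect the paths the port actually serves.

```python
def bdp_bytes(capacity_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product C * RTT, converted from bits to bytes."""
    return capacity_bps * rtt_s / 8

for gbps in (10, 100):
    for rtt_ms in (10, 50, 100):
        size = bdp_bytes(gbps * 1e9, rtt_ms / 1e3)
        print(f"{gbps:>3} Gbps, RTT {rtt_ms:>3} ms -> {size / 1e6:7.1f} MB")
```

A 10 Gbps port serving 50 ms paths, for example, calls for about 62.5 MB of buffering under this rule.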

Bufferbloat:

While allocating sufficient memory for buffering is desirable, the term RTT in the above equation depends on the use case at hand. Buffer space beyond what the actual RTT requires does not increase throughput; instead, it can fill with queued packets and add latency. This undesirable phenomenon is known as bufferbloat and can be mitigated by avoiding the over-allocation of buffers.
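The latency cost of an oversized buffer follows from the same quantities: a full buffer of B bytes draining at capacity C adds B/C of queueing delay. Because (C · RTT) / C = RTT, a buffer sized for a larger RTT than the one actually experienced adds up to that larger RTT in delay when it fills. The sketch assumes a 10 Gbps port whose buffer was sized for a hypothetical 200 ms RTT.

```python
def queueing_delay_ms(buffer_bytes: float, capacity_bps: float) -> float:
    """Delay added by a full FIFO buffer that drains at line rate."""
    return buffer_bytes * 8 / capacity_bps * 1e3

capacity_bps = 10e9
buffer_bytes = capacity_bps * 0.200 / 8      # sized for a 200 ms RTT (25 MB)
print(f"{queueing_delay_ms(buffer_bytes, capacity_bps):.0f} ms")   # 200 ms
```

If the flows using this port actually have a 10 ms RTT, a full buffer adds far more delay than the path itself.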

Routers and switches in a hierarchical network:

The figure above shows a hierarchical network. The access layer represents the network edge, where traffic enters or exits the network. In Science DMZs, DTNs, supercomputers, and research labs usually connect to the network through access-layer switches. The distribution layer interfaces between the access layer and the core layer, aggregating traffic from the access layer. The core layer is the network backbone; core routers forward traffic at very high speeds. In this simplified topology, the core router is also the border router, connecting the network to the WAN.

Access-layer switches must support a wide range of traffic capacities, from as low as 10 Mbps to as much as 100 Gbps. This wide mix complicates the choice of buffers, particularly on output ports connecting to the distribution layer. Specifically, buffer sizes must be large enough to absorb bursts from the end devices (DTNs, supercomputers, and lab devices).

Distribution- and core-layer switches must have as much buffer space as possible to handle bursts coming from the access-layer switches and from the WAN. In particular, attention must be paid to points where bandwidth capacity changes (e.g., where multiple smaller input ports are aggregated into a larger output port).
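The buffer demand at such capacity changes can be estimated with a simple fluid model (an assumption that ignores packet granularity): when several inputs burst simultaneously toward one output, the queue grows at the rate by which the combined input exceeds the output capacity, so the buffer needed to avoid loss is that excess rate times the burst duration. The port speeds and the 1 ms burst below are hypothetical.

```python
def burst_buffer_bytes(input_rates_bps, output_rate_bps, burst_s):
    """Buffer needed to absorb a simultaneous burst without loss (fluid model)."""
    excess_bps = max(0.0, sum(input_rates_bps) - output_rate_bps)
    return excess_bps * burst_s / 8

# Ten 10 Gbps access uplinks bursting together for 1 ms into a 40 Gbps port
print(f"{burst_buffer_bytes([10e9] * 10, 40e9, 0.001) / 1e6:.2f} MB")   # 7.50 MB
# A 100 Gbps WAN input feeding a 10 Gbps port for 1 ms
print(f"{burst_buffer_bytes([100e9], 10e9, 0.001) / 1e6:.2f} MB")       # 11.25 MB
```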

Switches manufactured for datacenters may not be a good choice for Science DMZs. They often use fabrics based upon shared memory designs. In these designs, the size of the output buffers may not be tunable, which may become a key performance limitation during the transfer of large flows.

Comparison of Transport-Layer Features in Enterprise Networks and Science DMZs

Transport layer issues

The table above compares transport-layer features in enterprise networks and Science DMZs. Reliability is required for file and dataset transfers; thus, Science DMZ applications use TCP. TLS and SSL add security on top of TCP's reliable service, but they introduce additional overhead and services that are redundant in this context: Globus, a well-known application-layer tool for transferring large files, already offers confidentiality, integrity, and authentication services.

TCP flow control is governed by the TCP buffer size. For Science DMZ applications, the buffer size must be greater than or equal to the bandwidth-delay product. With this buffer size, TCP behaves as a pipelined protocol. General-purpose applications, in contrast, often use a small buffer size, which produces stop-and-wait behavior.

If the TCP buffer size at the DTNs is smaller than the bandwidth-delay product, channel utilization is below 100%: the sender must repeatedly wait for acknowledgement segments before transmitting additional data segments. If the buffer size is greater than or equal to the bandwidth-delay product, path utilization approaches the maximum capacity, because many data segments can be in transit while acknowledgement segments are simultaneously received. For small, short-duration flows, this may not be essential; for large flows, however, the buffer size must be at least equal to the bandwidth-delay product to achieve full performance.

The MSS is perhaps one of the most important features in high-throughput, high-latency networks with packet loss. TCP pacing, which spaces segment transmissions out over time instead of sending them in bursts, is also a promising feature. The challenge for its wide adoption is the complexity of developing a mechanism to discover the bottleneck link and its capacity.
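The relationship between TCP buffer size and path utilization can be sketched with a simple model: with a window of W bytes in flight, at most W bytes can be delivered per RTT, so throughput is about min(C, W / RTT). The window sizes and the 10 Gbps, 50 ms path are illustrative assumptions. On Linux, the corresponding per-socket limits are set through sysctls such as `net.ipv4.tcp_rmem` and `net.ipv4.tcp_wmem`, whose appropriate values depend on the host.

```python
def window_limited_throughput_bps(window_bytes: float, capacity_bps: float, rtt_s: float) -> float:
    """Throughput is capped by one window per RTT or by the link capacity."""
    return min(capacity_bps, window_bytes * 8 / rtt_s)

capacity, rtt = 10e9, 0.050
bdp = capacity * rtt / 8   # 62.5 MB
for window in (64 * 1024, 16 * 2**20, bdp):
    rate = window_limited_throughput_bps(window, capacity, rtt)
    print(f"window {window / 2**20:8.2f} MiB -> {rate / 1e9:6.3f} Gbps "
          f"({100 * rate / capacity:5.1f}% utilization)")
```

A 64 KiB window, typical of untuned hosts, yields about 0.1% utilization on this path, while a BDP-sized window lets the flow fill the link.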

Application layer tools

The essential end devices inside a Science DMZ are the DTNs and the performance monitoring stations. DTNs run a data transfer tool, while monitoring stations run a performance monitoring application, typically perfSONAR. Other useful tools at deployment and evaluation time are WAN emulation and throughput measurement applications. These tools are convenient because they facilitate early performance evaluation without the need to connect the Science DMZ to a real WAN.

File Transfer Applications for Science DMZs:

The prevalent tool for science data transfers is Globus GridFTP. The following description refers to Globus; many of its features also apply to other applications recommended for Science DMZs.

Other file transfer applications for big data are Multicore Aware Data Transfer Middleware FTP (mdtmFTP) and Fast Data Transport (FDT). mdtmFTP is designed to efficiently use multicore CPUs, which place multiple computing cores on a single chip and are common in modern computer systems. mdtmFTP also improves throughput in DTNs that use a non-uniform memory access (NUMA) model. In the traditional uniform memory access (UMA) model, access to RAM from any CPU or core takes the same amount of time; in a NUMA model, access time depends on where the memory is located relative to the core.

FDT is an application optimized for the transfer of a large number of files: thousands of files can be sent continuously, without restarting the network transfer between files. However, despite encouraging performance results, FDT and mdtmFTP have not been widely adopted.

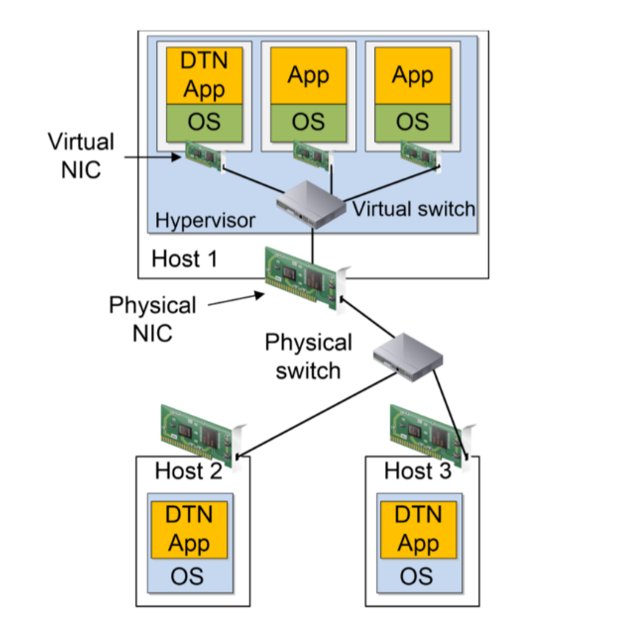

Virtual Machines and Science DMZs

The idea behind a virtual machine is to abstract the hardware of a computer into several execution environments. Because the NIC is a physical resource, access to it is also shared among these environments.

While virtual technologies have been widely adopted in enterprise networks, their use in Science DMZs has been discouraged for several reasons.

- The hypervisor represents a software layer that adds processing overhead.

- The physical NIC is potentially shared among multiple virtual machines.

- Even if the virtual DTN is the only virtual machine running on a physical server, the CPU must be shared with the hypervisor and the virtual switch.

- Commercial vendors may not disclose important attributes of the virtual switch, such as buffer size and switching architecture.

Taking the limitations stated above into consideration, virtualization is not recommended for Science DMZs operating at speeds above 10 Gbps.

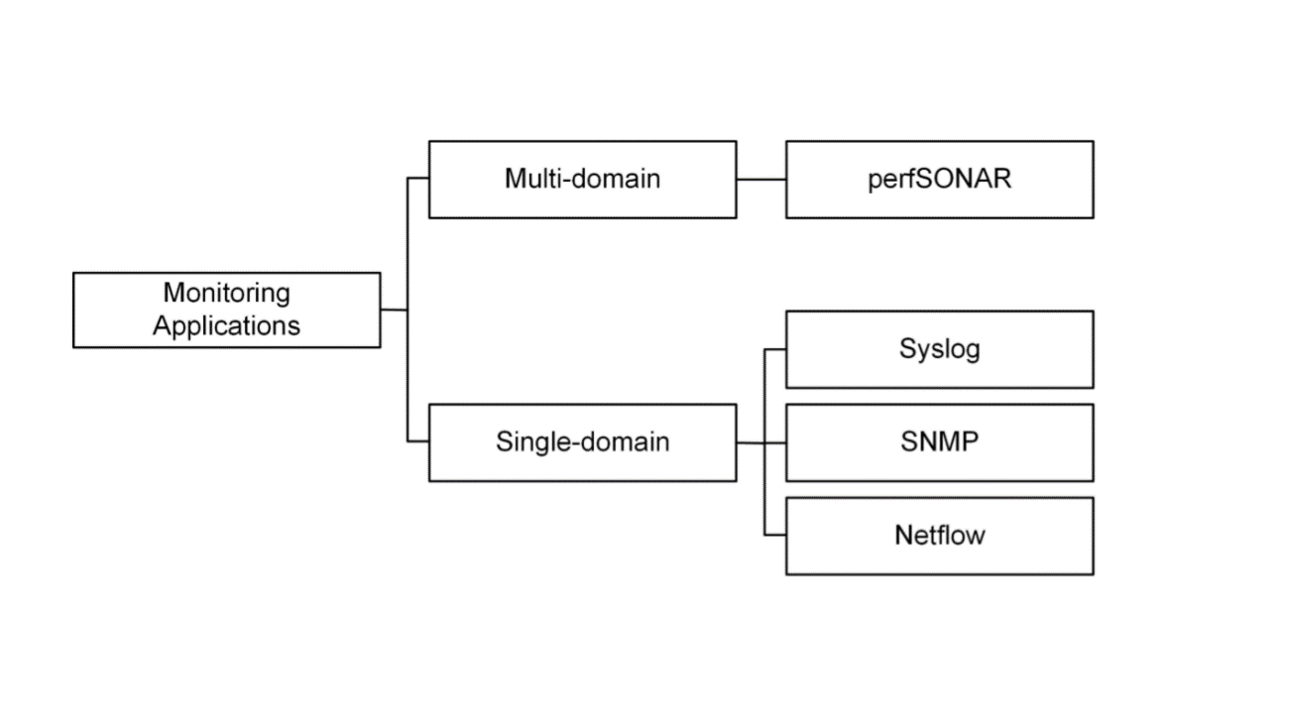

Monitoring and performance applications for Science DMZs

- One of the essential elements of a Science DMZ is the performance measurement and monitoring point.

- The monitoring process in Science DMZs focuses on multi-domain end-to-end performance metrics.

- On the other hand, the monitoring process in enterprise networks focuses on single-domain performance metrics.

perfSONAR application

- It helps locate network failures and maintain optimal end-to-end usage expectations.

- It offers several services, including automated bandwidth tests and diagnostic tools.

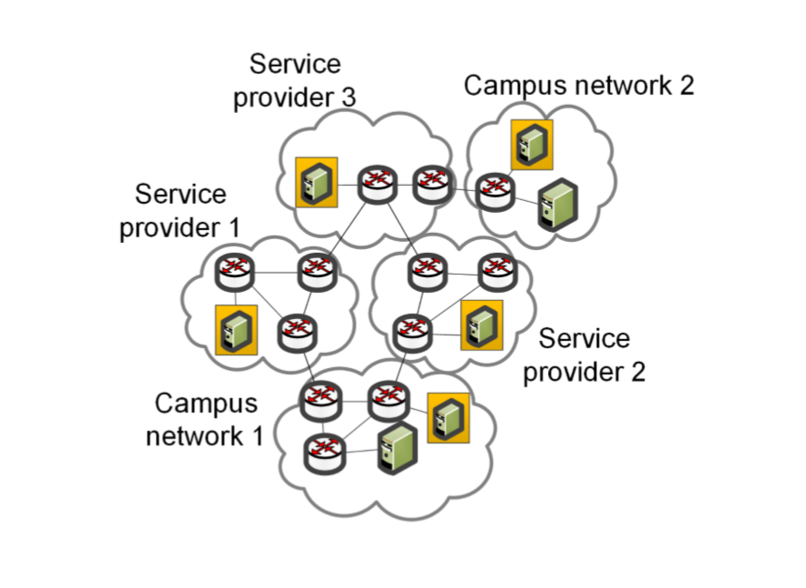

- One of its main features is its cooperative nature, by which an institution can measure several metrics (e.g., throughput, latency, and packet loss) to different intermediate domains and to a destination network.

Based on the figure below, campus network 1 can measure metrics from itself to campus network 2, as well as to the service providers.

Comparisons can be drawn between SNMP and perfSONAR